Contents

herding cats

This is a log of me going through Cats, a functional programming library for Scala. I’ll try to follow the structure of learning Scalaz that I wrote in 2012 (!).

Cats’ website says the name is a playful shortening of the word “category.” Some people say coordinating programmers is like herding cats (as in attempting to move dozens of them in a direction like cattle, while cats have their own ideas), and it seems to be true at least for those of us using Scala. Keenly aware of this situation, the cats list approachability as their first motivation.

Cats also looks interesting from the technical perspective. With its approachability and Erik Asheim (@d6/@non)’s awesomeness, people are gathering around them with new ideas such as Michael Pilquist (@mpilquist)’s simulacrum and Miles Sabin (@milessabin)’s deriving typeclasses. Hopefully I’ll learn more in the coming days.

day 0

This is a web log of me learning Cats, based a log of me learning Scalaz. It’s sometimes called a tutorial, but you should treat it more like a travel log that was scribbled together. If you want to learn the stuff, I suggest you read books and go through the examples yourself.

Before we dive into the details, here is a prequel to ease you in.

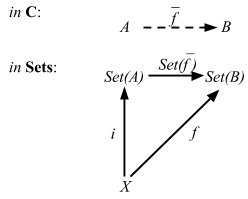

Intro to Cats

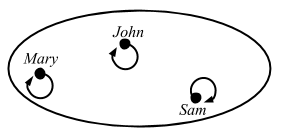

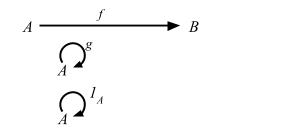

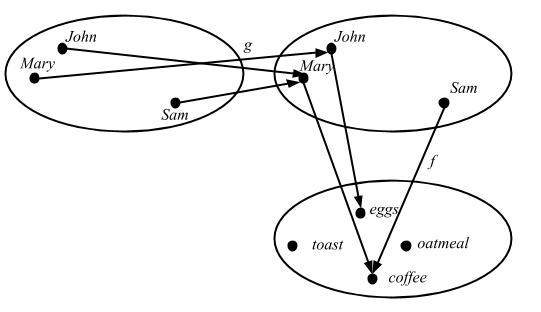

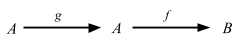

I’m going to borrow material from Nick Partridge’s Scalaz talk given at Melbourne Scala Users Group on March 22, 2010:

Scalaz talk is up - http://bit.ly/c2eTVR Lots of code showing how/why the library exists

— Nick Partridge (@nkpart) March 28, 2010

Cats consists of two parts:

- New datatypes (

Validated,State, etc) - Implementation of many general functions you need (ad-hoc polymorphism, traits + implicits)

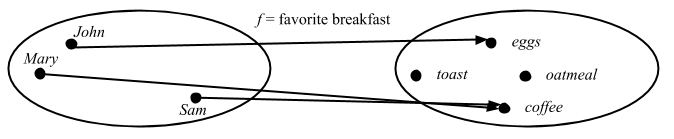

What is polymorphism?

Parametric polymorphism

Nick says:

In this function

head, it takes a list ofA’s, and returns anA. And it doesn’t matter what theAis: It could beInts,Strings,Oranges,Cars, whatever. AnyAwould work, and the function is defined for everyAthat there can be.

def head[A](xs: List[A]): A = xs(0)

head(1 :: 2 :: Nil)

// res1: Int = 1

case class Car(make: String)

head(Car("Civic") :: Car("CR-V") :: Nil)

// res2: Car = Car(make = "Civic")

Haskell wiki says:

Parametric polymorphism refers to when the type of a value contains one or more (unconstrained) type variables, so that the value may adopt any type that results from substituting those variables with concrete types.

Subtype polymorphism

Let’s think of a function plus that can add two values of type A:

def plus[A](a1: A, a2: A): A = ???

Depending on the type A, we need to provide different definition for what it means to add them.

One way to achieve this is through subtyping.

trait PlusIntf[A] {

def plus(a2: A): A

}

def plusBySubtype[A <: PlusIntf[A]](a1: A, a2: A): A = a1.plus(a2)

We can at least provide different definitions of plus for A.

But, this is not flexible since trait Plus needs to be mixed in at the time of defining the datatype.

So it can’t work for Int and String.

Ad-hoc polymorphism

The third approach in Scala is to provide an implicit conversion or implicit parameters for the trait.

trait CanPlus[A] {

def plus(a1: A, a2: A): A

}

def plus[A: CanPlus](a1: A, a2: A): A = implicitly[CanPlus[A]].plus(a1, a2)

This is truely ad-hoc in the sense that

- we can provide separate function definitions for different types of

A - we can provide function definitions to types (like

Int) without access to its source code - the function definitions can be enabled or disabled in different scopes

The last point makes Scala’s ad-hoc polymorphism more powerful than that of Haskell. More on this topic can be found at Debasish Ghosh @debasishg’s Scala Implicits : Type Classes Here I Come.

Let’s look into plus function in more detail.

sum function

Nick demonstrates an example of ad-hoc polymorphism by gradually making sum function more general, starting from a simple function that adds up a list of Ints:

def sum(xs: List[Int]): Int = xs.foldLeft(0) { _ + _ }

sum(List(1, 2, 3, 4))

// res0: Int = 10

Monoid

If we try to generalize a little bit. I’m going to pull out a thing called

Monoid. … It’s a type for which there exists a functionmappend, which produces another type in the same set; and also a function that produces a zero.

object IntMonoid {

def mappend(a: Int, b: Int): Int = a + b

def mzero: Int = 0

}

If we pull that in, it sort of generalizes what’s going on here:

def sum(xs: List[Int]): Int = xs.foldLeft(IntMonoid.mzero)(IntMonoid.mappend)

sum(List(1, 2, 3, 4))

// res2: Int = 10

Now we’ll abstract on the type about

Monoid, so we can defineMonoidfor any typeA. So nowIntMonoidis a monoid onInt:

trait Monoid[A] {

def mappend(a1: A, a2: A): A

def mzero: A

}

object IntMonoid extends Monoid[Int] {

def mappend(a: Int, b: Int): Int = a + b

def mzero: Int = 0

}

What we can do is that sum take a List of Int and a monoid on Int to sum it:

def sum(xs: List[Int], m: Monoid[Int]): Int = xs.foldLeft(m.mzero)(m.mappend)

sum(List(1, 2, 3, 4), IntMonoid)

// res4: Int = 10

We are not using anything to do with

Inthere, so we can replace allIntwith a general type:

def sum[A](xs: List[A], m: Monoid[A]): A = xs.foldLeft(m.mzero)(m.mappend)

sum(List(1, 2, 3, 4), IntMonoid)

// res6: Int = 10

The final change we have to take is to make the

Monoidimplicit so we don’t have to specify it each time.

def sum[A](xs: List[A])(implicit m: Monoid[A]): A = xs.foldLeft(m.mzero)(m.mappend)

{

implicit val intMonoid = IntMonoid

sum(List(1, 2, 3, 4))

}

// res8: Int = 10

Nick didn’t do this, but the implicit parameter is often written as a context bound:

def sum[A: Monoid](xs: List[A]): A = {

val m = implicitly[Monoid[A]]

xs.foldLeft(m.mzero)(m.mappend)

}

{

implicit val intMonoid = IntMonoid

sum(List(1, 2, 3, 4))

}

// res10: Int = 10

Our

sumfunction is pretty general now, appending any monoid in a list. We can test that by writing anotherMonoidforString. I’m also going to package these up in an object calledMonoid. The reason for that is Scala’s implicit resolution rules: When it needs an implicit parameter of some type, it’ll look for anything in scope. It’ll include the companion object of the type that you’re looking for.

trait Monoid[A] {

def mappend(a1: A, a2: A): A

def mzero: A

}

object Monoid {

implicit val IntMonoid: Monoid[Int] = new Monoid[Int] {

def mappend(a: Int, b: Int): Int = a + b

def mzero: Int = 0

}

implicit val StringMonoid: Monoid[String] = new Monoid[String] {

def mappend(a: String, b: String): String = a + b

def mzero: String = ""

}

}

def sum[A: Monoid](xs: List[A]): A = {

val m = implicitly[Monoid[A]]

xs.foldLeft(m.mzero)(m.mappend)

}

sum(List("a", "b", "c"))

// res12: String = "abc"

You can still provide different monoid directly to the function. We could provide an instance of monoid for

Intusing multiplications.

val multiMonoid: Monoid[Int] = new Monoid[Int] {

def mappend(a: Int, b: Int): Int = a * b

def mzero: Int = 1

}

// multiMonoid: Monoid[Int] = repl.MdocSession3@1dff4342

sum(List(1, 2, 3, 4))(multiMonoid)

// res13: Int = 24

FoldLeft

What we wanted was a function that generalized on

List. … So we want to generalize onfoldLeftoperation.

object FoldLeftList {

def foldLeft[A, B](xs: List[A], b: B, f: (B, A) => B) = xs.foldLeft(b)(f)

}

def sum[A: Monoid](xs: List[A]): A = {

val m = implicitly[Monoid[A]]

FoldLeftList.foldLeft(xs, m.mzero, m.mappend)

}

sum(List(1, 2, 3, 4))

// res1: Int = 10

sum(List("a", "b", "c"))

// res2: String = "abc"

sum(List(1, 2, 3, 4))(multiMonoid)

// res3: Int = 24

Now we can apply the same abstraction to pull out

FoldLefttypeclass.

trait FoldLeft[F[_]] {

def foldLeft[A, B](xs: F[A], b: B, f: (B, A) => B): B

}

object FoldLeft {

implicit val FoldLeftList: FoldLeft[List] = new FoldLeft[List] {

def foldLeft[A, B](xs: List[A], b: B, f: (B, A) => B) = xs.foldLeft(b)(f)

}

}

def sum[M[_]: FoldLeft, A: Monoid](xs: M[A]): A = {

val m = implicitly[Monoid[A]]

val fl = implicitly[FoldLeft[M]]

fl.foldLeft(xs, m.mzero, m.mappend)

}

sum(List(1, 2, 3, 4))

// res5: Int = 10

sum(List("a", "b", "c"))

// res6: String = "abc"

Both Int and List are now pulled out of sum.

Typeclasses in Cats

In the above example, the traits Monoid and FoldLeft correspond to Haskell’s typeclass.

Cats provides many typeclasses.

All this is broken down into just the pieces you need. So, it’s a bit like ultimate ducktyping because you define in your function definition that this is what you need and nothing more.

Method injection (enrich my library)

If we were to write a function that sums two types using the

Monoid, we need to call it like this.

def plus[A: Monoid](a: A, b: A): A = implicitly[Monoid[A]].mappend(a, b)

plus(3, 4)

// res0: Int = 7

We would like to provide an operator. But we don’t want to enrich just one type,

but enrich all types that has an instance for Monoid.

trait Monoid[A] {

def mappend(a: A, b: A): A

def mzero: A

}

object Monoid {

object syntax extends MonoidSyntax

implicit val IntMonoid: Monoid[Int] = new Monoid[Int] {

def mappend(a: Int, b: Int): Int = a + b

def mzero: Int = 0

}

implicit val StringMonoid: Monoid[String] = new Monoid[String] {

def mappend(a: String, b: String): String = a + b

def mzero: String = ""

}

}

trait MonoidSyntax {

implicit final def syntaxMonoid[A: Monoid](a: A): MonoidOps[A] =

new MonoidOps[A](a)

}

final class MonoidOps[A: Monoid](lhs: A) {

def |+|(rhs: A): A = implicitly[Monoid[A]].mappend(lhs, rhs)

}

import Monoid.syntax._

3 |+| 4

// res2: Int = 7

"a" |+| "b"

// res3: String = "ab"

We were able to inject |+| to both Int and String with just one definition.

Operator syntax for the standard datatypes

Using the same technique, Cats occasionally provides method injections for standard library datatypes like Option and Vector:

import cats._, cats.syntax.all._

1.some

// res5: Option[Int] = Some(value = 1)

1.some.orEmpty

// res6: Int = 1

But most operators in Cats are associated with typeclasses.

I hope you could get some feel on where Cats is coming from.

day 1

typeclasses 101

Learn You a Haskell for Great Good says:

A typeclass is a sort of interface that defines some behavior. If a type is a part of a typeclass, that means that it supports and implements the behavior the typeclass describes.

Cats says:

We are trying to make the library modular. It will have a tight core which will contain only the typeclasses and the bare minimum of data structures that are needed to support them. Support for using these typeclasses with the Scala standard library will be in the

stdproject.

Let’s keep going with learning me a Haskell.

sbt

Here’s a quick build.sbt to play with Cats:

val catsVersion = "2.4.2"

val catsCore = "org.typelevel" %% "cats-core" % catsVersion

val catsFree = "org.typelevel" %% "cats-free" % catsVersion

val catsLaws = "org.typelevel" %% "cats-laws" % catsVersion

val catsMtl = "org.typelevel" %% "cats-mtl-core" % "0.7.1"

val simulacrum = "org.typelevel" %% "simulacrum" % "1.0.1"

val kindProjector = compilerPlugin("org.typelevel" % "kind-projector" % "0.11.3" cross CrossVersion.full)

val resetAllAttrs = "org.scalamacros" %% "resetallattrs" % "1.0.0"

val munit = "org.scalameta" %% "munit" % "0.7.22"

val disciplineMunit = "org.typelevel" %% "discipline-munit" % "1.0.6"

ThisBuild / scalaVersion := "2.13.5"

lazy val root = (project in file("."))

.settings(

organization := "com.example",

name := "something",

libraryDependencies ++= Seq(

catsCore,

catsFree,

catsMtl,

simulacrum,

kindProjector,

resetAllAttrs,

catsLaws % Test,

munit % Test,

disciplineMunit % Test,

),

scalacOptions ++= Seq(

"-deprecation",

"-encoding", "UTF-8",

"-feature",

"-language:_"

)

)

You can then open the REPL using sbt 1.4.9:

$ sbt

> console

[info] Starting scala interpreter...

Welcome to Scala 2.13.5 (OpenJDK 64-Bit Server VM, Java 1.8.0_232).

Type in expressions for evaluation. Or try :help.

scala>

There’s also API docs generated for Cats.

Eq

LYAHFGG:

Eqis used for types that support equality testing. The functions its members implement are==and/=.

Cats’ equivalent for the Eq typeclass is also called Eq.

Eq was moved from non/algebra into cats-kernel subproject, and became part of Cats:

import cats._, cats.syntax.all._

1 === 1

// res0: Boolean = true

1 === "foo"

// error: type mismatch;

// found : String("foo")

// required: Int

// 1 === "foo"

// ^^^^^

(Some(1): Option[Int]) =!= (Some(2): Option[Int])

// res2: Boolean = true

Instead of the standard ==, Eq enables === and =!= syntax by declaring eqv method. The main difference is that === would fail compilation if you tried to compare Int and String.

In algebra, neqv is implemented based on eqv.

/**

* A type class used to determine equality between 2 instances of the same

* type. Any 2 instances `x` and `y` are equal if `eqv(x, y)` is `true`.

* Moreover, `eqv` should form an equivalence relation.

*/

trait Eq[@sp A] extends Any with Serializable { self =>

/**

* Returns `true` if `x` and `y` are equivalent, `false` otherwise.

*/

def eqv(x: A, y: A): Boolean

/**

* Returns `false` if `x` and `y` are equivalent, `true` otherwise.

*/

def neqv(x: A, y: A): Boolean = !eqv(x, y)

....

}

This is an example of polymorphism. Whatever equality means for the type A,

neqv is the opposite of it. It does not matter if it’s String, Int, or whatever.

Another way of looking at it is that given Eq[A], === is universally the opposite of =!=.

I’m a bit concerned that Eq seems to be using the terms “equal” and “equivalent”

interchangably. Equivalence relationship could include “having the same birthday”

whereas equality also requires substitution property.

Order

LYAHFGG:

Ordis for types that have an ordering.Ordcovers all the standard comparing functions such as>,<,>=and<=.

Cats’ equivalent for the Ord typeclass is Order.

// plain Scala

1 > 2.0

// res0: Boolean = false

import cats._, cats.syntax.all._

1 compare 2.0

// error: type mismatch;

// found : Double(2.0)

// required: Int

// 1.0 compare 2.0

// ^^^

import cats._, cats.syntax.all._

1.0 compare 2.0

// res2: Int = -1

1.0 max 2.0

// res3: Double = 2.0

Order enables compare syntax which returns Int: negative, zero, or positive.

It also enables min and max operators.

Similar to Eq, comparing Int and Double fails compilation.

PartialOrder

In addition to Order, Cats also defines PartialOrder.

import cats._, cats.syntax.all._

1 tryCompare 2

// res0: Option[Int] = Some(value = -1)

1.0 tryCompare Double.NaN

// res1: Option[Int] = Some(value = -1)

PartialOrder enables tryCompare syntax which returns Option[Int].

According to algebra, it’ll return None if operands are not comparable.

It’s returning Some(-1) when comparing 1.0 and Double.NaN, so I’m not sure when things are incomparable.

def lt[A: PartialOrder](a1: A, a2: A): Boolean = a1 <= a2

lt(1, 2)

// res2: Boolean = true

lt[Int](1, 2.0)

// error: type mismatch;

// found : Double(2.0)

// required: Int

// lt[Int](1, 2.0)

// ^^^

PartialOrder also enables >, >=, <, and <= operators,

but these are tricky to use because if you’re not careful

you could end up using the built-in comparison operators.

Show

LYAHFGG:

Members of

Showcan be presented as strings.

Cats’ equivalent for the Show typeclass is Show:

import cats._, cats.syntax.all._

3.show

// res0: String = "3"

"hello".show

// res1: String = "hello"

Here’s the typeclass contract:

@typeclass trait Show[T] {

def show(f: T): String

}

At first, it might seem silly to define Show because Scala

already has toString on Any.

Any also means anything would match the criteria, so you lose type safety.

The toString could be junk supplied by some parent class:

(new {}).toString

// res2: String = "repl.MdocSession1@891c355"

(new {}).show

// error: value show is not a member of AnyRef

// (new {}).show

// ^^^^^^^^^^^^

object Show provides two functions to create a Show instance:

object Show {

/** creates an instance of [[Show]] using the provided function */

def show[A](f: A => String): Show[A] = new Show[A] {

def show(a: A): String = f(a)

}

/** creates an instance of [[Show]] using object toString */

def fromToString[A]: Show[A] = new Show[A] {

def show(a: A): String = a.toString

}

implicit val catsContravariantForShow: Contravariant[Show] = new Contravariant[Show] {

def contramap[A, B](fa: Show[A])(f: B => A): Show[B] =

show[B](fa.show _ compose f)

}

}

Let’s try using them:

case class Person(name: String)

case class Car(model: String)

{

implicit val personShow = Show.show[Person](_.name)

Person("Alice").show

}

// res4: String = "Alice"

{

implicit val carShow = Show.fromToString[Car]

Car("CR-V")

}

// res5: Car = Car(model = "CR-V")

Read

LYAHFGG:

Readis sort of the opposite typeclass ofShow. Thereadfunction takes a string and returns a type which is a member ofRead.

I could not find Cats’ equivalent for this typeclass.

I find myself defining Read and its variant ReadJs time and time again.

Stringly typed programming is ugly.

At the same time, String is a robust data format to cross platform boundaries (e.g. JSON).

Also we humans know how to deal with them directly (e.g. command line options),

so it’s hard to get away from String parsing.

If we’re going to do it anyway, having Read makes it easier.

Enum

LYAHFGG:

Enummembers are sequentially ordered types — they can be enumerated. The main advantage of theEnumtypeclass is that we can use its types in list ranges. They also have defined successors and predecessors, which you can get with thesuccandpredfunctions.

I could not find Cats’ equivalent for this typeclass.

It’s not an Enum or range, but non/spire has an interesting data structure called Interval.

Check out Erik’s Intervals: Unifying Uncertainty, Ranges, and Loops talk from nescala 2015.

Numeric

LYAHFGG:

Numis a numeric typeclass. Its members have the property of being able to act like numbers.

I could not find Cats’ equivalent for this typeclass,

but spire defines Numeric. Cats doesn’t define Bounds either.

So far we’ve seen some of typeclasses that are not defined in Cats. This is not necessarily a bad thing because having a tight core is part of the design goal of Cats.

typeclasses 102

I am now going to skip over to Chapter 8 Making Our Own Types and Typeclasses (Chapter 7 if you have the book) since the chapters in between are mostly about Haskell syntax.

A traffic light datatype

data TrafficLight = Red | Yellow | Green

In Scala this would be:

import cats._, cats.syntax.all._

sealed trait TrafficLight

object TrafficLight {

case object Red extends TrafficLight

case object Yellow extends TrafficLight

case object Green extends TrafficLight

}

Now let’s define an instance for Eq.

implicit val trafficLightEq: Eq[TrafficLight] =

new Eq[TrafficLight] {

def eqv(a1: TrafficLight, a2: TrafficLight): Boolean = a1 == a2

}

// trafficLightEq: Eq[TrafficLight] = repl.MdocSession1@124d506d

Note: The latest algebra.Equal includes Equal.instance and Equal.fromUniversalEquals.

Can I use the Eq?

TrafficLight.Red === TrafficLight.Yellow

// error: value === is not a member of object repl.MdocSession.App.TrafficLight.Red

// TrafficLight.red === TrafficLight.yellow

// ^^^^^^^^^^^^^^^^^^^^

So apparently Eq[TrafficLight] doesn’t get picked up because Eq has nonvariant subtyping: Eq[A].

One way to workaround this issue is to define helper functions to cast them up to TrafficLight:

import cats._, cats.syntax.all._

sealed trait TrafficLight

object TrafficLight {

def red: TrafficLight = Red

def yellow: TrafficLight = Yellow

def green: TrafficLight = Green

case object Red extends TrafficLight

case object Yellow extends TrafficLight

case object Green extends TrafficLight

}

{

implicit val trafficLightEq: Eq[TrafficLight] =

new Eq[TrafficLight] {

def eqv(a1: TrafficLight, a2: TrafficLight): Boolean = a1 == a2

}

TrafficLight.red === TrafficLight.yellow

}

// res2: Boolean = false

It is a bit of boilerplate, but it works.

day 2

Yesterday we reviewed a few basic typeclasses from Cats like Eq by using Learn You a Haskell for Great Good as the guide.

Making our own typeclass with simulacrum

LYAHFGG:

In JavaScript and some other weakly typed languages, you can put almost anything inside an if expression. …. Even though strictly using

Boolfor boolean semantics works better in Haskell, let’s try and implement that JavaScript-ish behavior anyway. For fun!

The conventional steps of defining a modular typeclass in Scala used to look like:

- Define typeclass contract trait

Foo. - Define a companion object

Foowith a helper methodapplythat acts likeimplicitly, and a way of definingFooinstances typically from a function. - Define

FooOpsclass that defines unary or binary operators. - Define

FooSyntaxtrait that implicitly providesFooOpsfrom aFooinstance.

Frankly, these steps are mostly copy-paste boilerplate except for the first one.

Enter Michael Pilquist (@mpilquist)’s simulacrum.

simulacrum magically generates most of steps 2-4 just by putting @typeclass annotation.

By chance, Stew O’Connor (@stewoconnor/@stew)’s #294 got merged,

which refactors Cats to use it.

Yes-No typeclass

In any case, let’s see if we can make our own truthy value typeclass.

Note the @typeclass annotation:

scala> import simulacrum._

scala> :paste

@typeclass trait CanTruthy[A] { self =>

/** Return true, if `a` is truthy. */

def truthy(a: A): Boolean

}

object CanTruthy {

def fromTruthy[A](f: A => Boolean): CanTruthy[A] = new CanTruthy[A] {

def truthy(a: A): Boolean = f(a)

}

}

According to the README, the macro will generate all the operator enrichment stuff:

// This is the supposed generated code. You don't have to write it!

object CanTruthy {

def fromTruthy[A](f: A => Boolean): CanTruthy[A] = new CanTruthy[A] {

def truthy(a: A): Boolean = f(a)

}

def apply[A](implicit instance: CanTruthy[A]): CanTruthy[A] = instance

trait Ops[A] {

def typeClassInstance: CanTruthy[A]

def self: A

def truthy: A = typeClassInstance.truthy(self)

}

trait ToCanTruthyOps {

implicit def toCanTruthyOps[A](target: A)(implicit tc: CanTruthy[A]): Ops[A] = new Ops[A] {

val self = target

val typeClassInstance = tc

}

}

trait AllOps[A] extends Ops[A] {

def typeClassInstance: CanTruthy[A]

}

object ops {

implicit def toAllCanTruthyOps[A](target: A)(implicit tc: CanTruthy[A]): AllOps[A] = new AllOps[A] {

val self = target

val typeClassInstance = tc

}

}

}

To make sure it works, let’s define an instance for Int and use it. The eventual goal is to get 1.truthy to return true:

scala> implicit val intCanTruthy: CanTruthy[Int] = CanTruthy.fromTruthy({

case 0 => false

case _ => true

})

scala> import CanTruthy.ops._

scala> 10.truthy

It works. This is quite nifty.

One caveat is that this requires Macro Paradise plugin to compile. Once it’s compiled the user of CanTruthy can use it without Macro Paradise.

Symbolic operators

For CanTruthy the injected operator happened to be unary, and it matched the name of the function on the typeclass contract. simulacrum can also define operator with symbolic names using @op annotation:

scala> @typeclass trait CanAppend[A] {

@op("|+|") def append(a1: A, a2: A): A

}

scala> implicit val intCanAppend: CanAppend[Int] = new CanAppend[Int] {

def append(a1: Int, a2: Int): Int = a1 + a2

}

scala> import CanAppend.ops._

scala> 1 |+| 2

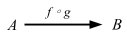

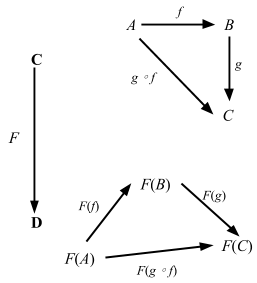

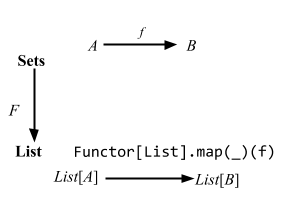

Functor

LYAHFGG:

And now, we’re going to take a look at the

Functortypeclass, which is basically for things that can be mapped over.

Like the book let’s look how it's implemented:

/**

* Functor.

*

* The name is short for "covariant functor".

*

* Must obey the laws defined in cats.laws.FunctorLaws.

*/

@typeclass trait Functor[F[_]] extends functor.Invariant[F] { self =>

def map[A, B](fa: F[A])(f: A => B): F[B]

....

}

Here’s how we can use this:

import cats._, cats.syntax.all._

Functor[List].map(List(1, 2, 3)) { _ + 1 }

// res0: List[Int] = List(2, 3, 4)

Let’s call the above usage the function syntax.

We now know that @typeclass annotation will automatically turn a map function into a map operator.

The fa part turns into the this of the method, and the second parameter list will now be

the parameter list of map operator:

// Supposed generated code

object Functor {

trait Ops[F[_], A] {

def typeClassInstance: Functor[F]

def self: F[A]

def map[B](f: A => B): F[B] = typeClassInstance.map(self)(f)

}

}

This looks almost like the map method on Scala collection library,

except this map doesn’t do the CanBuildFrom auto conversion.

Either as a functor

Cats defines a Functor instance for Either[A, B].

(Right(1): Either[String, Int]) map { _ + 1 }

// res1: Either[String, Int] = Right(value = 2)

(Left("boom!"): Either[String, Int]) map { _ + 1 }

// res2: Either[String, Int] = Left(value = "boom!")

Note that the above demonstration only works because Either[A, B] at the moment

does not implement its own map.

If I used List(1, 2, 3) it will call List’s implementation of map instead of

the Functor[List]’s map. Therefore, even though the operator syntax looks familiar,

we should either avoid using it unless you’re sure that standard library doesn’t implement the map

or you’re using it from a polymorphic function.

One workaround is to opt for the function syntax.

Function as a functor

Cats also defines a Functor instance for Function1.

{

val addOne: Int => Int = (x: Int) => x + 1

val h: Int => Int = addOne map {_ * 7}

h(3)

}

// res3: Int = 28

This is interesting. Basically map gives us a way to compose functions, except the order is in reverse from f compose g. Another way of looking at Function1 is that it’s an infinite map from the domain to the range. Now let’s skip the input and output stuff and go to Functors, Applicative Functors and Monoids.

How are functions functors? …

What does the type

fmap :: (a -> b) -> (r -> a) -> (r -> b)for this instance tell us? Well, we see that it takes a function fromatoband a function fromrtoaand returns a function fromrtob. Does this remind you of anything? Yes! Function composition!

Oh man, LYAHFGG came to the same conclusion as I did about the function composition. But wait…

ghci> fmap (*3) (+100) 1

303

ghci> (*3) . (+100) $ 1

303

In Haskell, the fmap seems to be working in the same order as f compose g. Let’s check in Scala using the same numbers:

{

(((_: Int) * 3) map {_ + 100}) (1)

}

// res4: Int = 103

Something is not right. Let’s compare the declaration of fmap and Cats’ map function:

fmap :: (a -> b) -> f a -> f b

and here’s Cats:

def map[A, B](fa: F[A])(f: A => B): F[B]

So the order is flipped. Here’s Paolo Giarrusso (@blaisorblade)’s explanation:

That’s a common Haskell-vs-Scala difference.

In Haskell, to help with point-free programming, the “data” argument usually comes last. For instance, I can write

map f . map g . map hand get a list transformer, because the argument order ismap f list. (Incidentally, map is an restriction of fmap to the List functor).In Scala instead, the “data” argument is usually the receiver. That’s often also important to help type inference, so defining map as a method on functions would not bring you very far: think the mess Scala type inference would make of

(x => x + 1) map List(1, 2, 3).

This seems to be the popular explanation.

Lifting a function

LYAHFGG:

[We can think of

fmapas] a function that takes a function and returns a new function that’s just like the old one, only it takes a functor as a parameter and returns a functor as the result. It takes ana -> bfunction and returns a functionf a -> f b. This is called lifting a function.

ghci> :t fmap (*2)

fmap (*2) :: (Num a, Functor f) => f a -> f a

ghci> :t fmap (replicate 3)

fmap (replicate 3) :: (Functor f) => f a -> f [a]

If the parameter order has been flipped, are we going to miss out on this lifting goodness?

Fortunately, Cats implements derived functions under the Functor typeclass:

@typeclass trait Functor[F[_]] extends functor.Invariant[F] { self =>

def map[A, B](fa: F[A])(f: A => B): F[B]

....

// derived methods

/**

* Lift a function f to operate on Functors

*/

def lift[A, B](f: A => B): F[A] => F[B] = map(_)(f)

/**

* Empty the fa of the values, preserving the structure

*/

def void[A](fa: F[A]): F[Unit] = map(fa)(_ => ())

/**

* Tuple the values in fa with the result of applying a function

* with the value

*/

def fproduct[A, B](fa: F[A])(f: A => B): F[(A, B)] = map(fa)(a => a -> f(a))

/**

* Replaces the `A` value in `F[A]` with the supplied value.

*/

def as[A, B](fa: F[A], b: B): F[B] = map(fa)(_ => b)

}

As you see, we have lift!

{

val lifted = Functor[List].lift {(_: Int) * 2}

lifted(List(1, 2, 3))

}

// res5: List[Int] = List(2, 4, 6)

We’ve just lifted the function {(_: Int) * 2} to List[Int] => List[Int]. Here the other derived functions using the operator syntax:

List(1, 2, 3).void

// res6: List[Unit] = List((), (), ())

List(1, 2, 3) fproduct {(_: Int) * 2}

// res7: List[(Int, Int)] = List((1, 2), (2, 4), (3, 6))

List(1, 2, 3) as "x"

// res8: List[String] = List("x", "x", "x")

Functor Laws

LYAHFGG:

In order for something to be a functor, it should satisfy some laws. All functors are expected to exhibit certain kinds of functor-like properties and behaviors. … The first functor law states that if we map the id function over a functor, the functor that we get back should be the same as the original functor.

We can check this for Either[A, B].

val x: Either[String, Int] = Right(1)

// x: Either[String, Int] = Right(value = 1)

assert { (x map identity) === x }

The second law says that composing two functions and then mapping the resulting function over a functor should be the same as first mapping one function over the functor and then mapping the other one.

In other words,

val f = {(_: Int) * 3}

// f: Int => Int = <function1>

val g = {(_: Int) + 1}

// g: Int => Int = <function1>

assert { (x map (f map g)) === (x map f map g) }

These are laws the implementer of the functors must abide, and not something the compiler can check for you.

Checking laws with Discipline

The compiler can’t check for the laws, but Cats ships with a FunctorLaws trait that describes this in code:

/**

* Laws that must be obeyed by any [[Functor]].

*/

trait FunctorLaws[F[_]] extends InvariantLaws[F] {

implicit override def F: Functor[F]

def covariantIdentity[A](fa: F[A]): IsEq[F[A]] =

fa.map(identity) <-> fa

def covariantComposition[A, B, C](fa: F[A], f: A => B, g: B => C): IsEq[F[C]] =

fa.map(f).map(g) <-> fa.map(f andThen g)

}

Checking laws from the REPL

This is based on a library called Discipline, which is a wrapper around ScalaCheck. We can run these tests from the REPL with ScalaCheck.

scala> import cats._, cats.syntax.all._

import cats._

import cats.syntax.all._

scala> import cats.laws.discipline.FunctorTests

import cats.laws.discipline.FunctorTests

scala> val rs = FunctorTests[Either[Int, *]].functor[Int, Int, Int]

val rs: cats.laws.discipline.FunctorTests[[?$0$]scala.util.Either[Int,?$0$]]#RuleSet = org.typelevel.discipline.Laws$DefaultRuleSet@2b1a2a1d

scala> import org.scalacheck.Test.Parameters

import org.scalacheck.Test.Parameters

scala> rs.all.check(Parameters.default)

+ functor.covariant composition: OK, passed 100 tests.

+ functor.covariant identity: OK, passed 100 tests.

+ functor.invariant composition: OK, passed 100 tests.

+ functor.invariant identity: OK, passed 100 tests.

rs.all returns org.scalacheck.Properties, which implements check method.

Checking laws with Discipline + MUnit

In addition to ScalaCheck, you can call these tests from ScalaTest, Specs2, or MUnit. An MUnit test to check the functor law for Either[Int, Int] looks like this:

package example

import cats._

import cats.laws.discipline.FunctorTests

class EitherTest extends munit.DisciplineSuite {

checkAll("Either[Int, Int]", FunctorTests[Either[Int, *]].functor[Int, Int, Int])

}

The Either[Int, *] is using non/kind-projector.

Running the test from sbt displays the following output:

sbt:herding-cats> Test/testOnly example.EitherTest

example.EitherTest:

+ Either[Int, Int]: functor.covariant composition 0.096s

+ Either[Int, Int]: functor.covariant identity 0.017s

+ Either[Int, Int]: functor.invariant composition 0.041s

+ Either[Int, Int]: functor.invariant identity 0.011s

[info] Passed: Total 4, Failed 0, Errors 0, Passed 4

Breaking the law

LYAHFGG:

Let’s take a look at a pathological example of a type constructor being an instance of the Functor typeclass but not really being a functor, because it doesn’t satisfy the laws.

Let’s try breaking the law.

package example

import cats._

sealed trait COption[+A]

case class CSome[A](counter: Int, a: A) extends COption[A]

case object CNone extends COption[Nothing]

object COption {

implicit def coptionEq[A]: Eq[COption[A]] = new Eq[COption[A]] {

def eqv(a1: COption[A], a2: COption[A]): Boolean = a1 == a2

}

implicit val coptionFunctor = new Functor[COption] {

def map[A, B](fa: COption[A])(f: A => B): COption[B] =

fa match {

case CNone => CNone

case CSome(c, a) => CSome(c + 1, f(a))

}

}

}

Here’s how we can use this:

import cats._, cats.syntax.all._

import example._

(CSome(0, "hi"): COption[String]) map {identity}

// res0: COption[String] = CSome(counter = 1, a = "hi")

This breaks the first law because the result of the identity function is not equal to the input.

To catch this we need to supply an “arbitrary” COption[A] implicitly:

package example

import cats._

import cats.laws.discipline.{ FunctorTests }

import org.scalacheck.{ Arbitrary, Gen }

class COptionTest extends munit.DisciplineSuite {

checkAll("COption[Int]", FunctorTests[COption].functor[Int, Int, Int])

implicit def coptionArbiterary[A](implicit arbA: Arbitrary[A]): Arbitrary[COption[A]] =

Arbitrary {

val arbSome = for {

i <- implicitly[Arbitrary[Int]].arbitrary

a <- arbA.arbitrary

} yield (CSome(i, a): COption[A])

val arbNone = Gen.const(CNone: COption[Nothing])

Gen.oneOf(arbSome, arbNone)

}

}

Here’s the output:

example.COptionTest:

failing seed for functor.covariant composition is 43LA3KHokN6KnEAzbkXi6IijQU91ran9-zsO2JeIyIP=

==> X example.COptionTest.COption[Int]: functor.covariant composition 0.058s munit.FailException: /Users/eed3si9n/work/herding-cats/src/test/scala/example/COptionTest.scala:8

7:class COptionTest extends munit.DisciplineSuite {

8: checkAll("COption[Int]", FunctorTests[COption].functor[Int, Int, Int])

9:

Failing seed: 43LA3KHokN6KnEAzbkXi6IijQU91ran9-zsO2JeIyIP=

You can reproduce this failure by adding the following override to your suite:

override val scalaCheckInitialSeed = "43LA3KHokN6KnEAzbkXi6IijQU91ran9-zsO2JeIyIP="

Falsified after 0 passed tests.

> Labels of failing property:

Expected: CSome(2,-1)

Received: CSome(3,-1)

> ARG_0: CSome(1,0)

> ARG_1: org.scalacheck.GenArities$$Lambda$36505/1702985322@62d7d97c

> ARG_2: org.scalacheck.GenArities$$Lambda$36505/1702985322@18bdc9d7

....

failing seed for functor.covariant identity is a4C-NCiCQEn0lU6F_TXdy5-IZ-XhMYDrC0vipJ3O_tG=

==> X example.COptionTest.COption[Int]: functor.covariant identity 0.003s munit.FailException: /Users/eed3si9n/work/herding-cats/src/test/scala/example/COptionTest.scala:8

7:class COptionTest extends munit.DisciplineSuite {

8: checkAll("COption[Int]", FunctorTests[COption].functor[Int, Int, Int])

9:

Failing seed: RhjRyflmRS-5CYveyf0uAFHuX6mWNm-Z98FVIs2aIVC=

You can reproduce this failure by adding the following override to your suite:

override val scalaCheckInitialSeed = "RhjRyflmRS-5CYveyf0uAFHuX6mWNm-Z98FVIs2aIVC="

Falsified after 1 passed tests.

> Labels of failing property:

Expected: CSome(-1486306630,-1498342842)

Received: CSome(-1486306629,-1498342842)

> ARG_0: CSome(-1486306630,-1498342842)

....

failing seed for functor.invariant composition is 9uQIZNNK_uZksfWg5pRb0VJUIgUtkv9vG9ckZ4UlRwD=

==> X example.COptionTest.COption[Int]: functor.invariant composition 0.005s munit.FailException: /Users/eed3si9n/work/herding-cats/src/test/scala/example/COptionTest.scala:8

7:class COptionTest extends munit.DisciplineSuite {

8: checkAll("COption[Int]", FunctorTests[COption].functor[Int, Int, Int])

9:

Failing seed: 9uQIZNNK_uZksfWg5pRb0VJUIgUtkv9vG9ckZ4UlRwD=

You can reproduce this failure by adding the following override to your suite:

override val scalaCheckInitialSeed = "9uQIZNNK_uZksfWg5pRb0VJUIgUtkv9vG9ckZ4UlRwD="

Falsified after 0 passed tests.

> Labels of failing property:

Expected: CSome(1,2147483647)

Received: CSome(2,2147483647)

> ARG_0: CSome(0,1095768235)

> ARG_1: org.scalacheck.GenArities$$Lambda$36505/1702985322@431263ab

> ARG_2: org.scalacheck.GenArities$$Lambda$36505/1702985322@5afe6566

> ARG_3: org.scalacheck.GenArities$$Lambda$36505/1702985322@ca0deda

> ARG_4: org.scalacheck.GenArities$$Lambda$36505/1702985322@1d7dde37

....

failing seed for functor.invariant identity is RcktTeI0rbpoUfuI3FHdvZtVGXGMoAjB6JkNBcTNTVK=

==> X example.COptionTest.COption[Int]: functor.invariant identity 0.002s munit.FailException: /Users/eed3si9n/work/herding-cats/src/test/scala/example/COptionTest.scala:8

7:class COptionTest extends munit.DisciplineSuite {

8: checkAll("COption[Int]", FunctorTests[COption].functor[Int, Int, Int])

9:

Failing seed: RcktTeI0rbpoUfuI3FHdvZtVGXGMoAjB6JkNBcTNTVK=

You can reproduce this failure by adding the following override to your suite:

override val scalaCheckInitialSeed = "RcktTeI0rbpoUfuI3FHdvZtVGXGMoAjB6JkNBcTNTVK="

Falsified after 0 passed tests.

> Labels of failing property:

Expected: CSome(2147483647,1054398067)

Received: CSome(-2147483648,1054398067)

> ARG_0: CSome(2147483647,1054398067)

....

[error] Failed: Total 4, Failed 4, Errors 0, Passed 0

[error] Failed tests:

[error] example.COptionTest

[error] (Test / testOnly) sbt.TestsFailedException: Tests unsuccessful

The tests failed as expected.

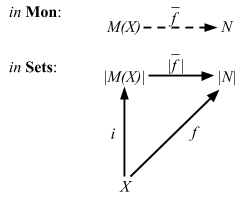

Import guide

Cats makes heavy use of implicits. Both as a user and an extender of the library, it will be useful to have general idea on where things are coming from. If you’re just starting out with Cats, you can use the following the imports and skip this page, assuming you’re using Cats 2.2.0 and above:

scala> import cats._, cats.data._, cats.syntax.all._

Prior to Cats 2.2.0 it was:

scala> import cats._, cats.data._, cats.implicits._

Implicits review

Let’s quickly review Scala 2’s imports and implicits! In Scala, imports are used for two purposes:

- To include names of values and types into the scope.

- To include implicits into the scope.

Given some type A, implicit is a mechanism to ask the compiler for a specific (term) value for the type. This can be used for different purposes, for Cats, the 2 main usages are:

- instances; to provide typeclass instances.

- syntax; to inject methods and operators. (method extension)

Implicits are selected in the following precedence:

- Values and converters accessible without prefix via local declaration, imports, outer scope, inheritance, and current package object. Inner scope can shadow values when they are named the same.

- Implicit scope. Values and converters declared in companion objects and package object of the type, its parts, or super types.

import cats._

Now let’s see what gets imported with import cats._.

First, the names. Typeclasses like Show[A] and Functor[F[_]] are implemented as trait, and are defined under the cats package. So instead of writing cats.Show[A] we can write Show[A].

Next, also the names, but type aliases. cats’s package object declares type aliases like Eq[A] and ~>[F[_], G[_]]. Again, these can also be accessed as cats.Eq[A] if you want.

Finally, catsInstancesForId is defined as typeclass instance of Id[A] for Traverse[F[_]], Monad[F[_]] etc, but it’s not relevant. By virtue of declaring an instance within its package object it will be available, so importing doesn’t add much. Let’s check this:

scala> cats.Functor[cats.Id]

res0: cats.Functor[cats.Id] = cats.package$$anon$1@3c201c09

No import needed, which is a good thing. So, the merit of import cats._ is for convenience, and it’s optional.

Implicit scope

In March 2020, Travis Brown’s #3043 was merged and was released as Cats 2.2.0. In short, this change added the typeclass instances of standard library types into the companion object of the typeclasses.

This reduces the need for importing things into the lexical scope, which has the benefit of simplicity and apparently less work for the compiler. For instance, with Cats 2.4.x the following works without any imports:

scala> cats.Functor[Option]

val res1: cats.Functor[Option] = cats.instances.OptionInstances$$anon$1@56a2a3bf

See Travis’s Implicit scope and Cats for more details.

import cats.data._

Next let’s see what gets imported with import cats.data._.

First, more names. There are custom datatype defined under the cats.data package such as Validated[+E, +A].

Next, the type aliases. The cats.data package object defines type aliases such as Reader[A, B], which is treated as a specialization of ReaderT transformer. We can still write this as cats.data.Reader[A, B].

import cats.implicits._

What then is import cats.implicits._ doing? Here’s the definition of implicits object:

package cats

object implicits extends syntax.AllSyntax with instances.AllInstances

This is quite a nice way of organizing the imports. implicits object itself doesn’t define anythig and it just mixes in the traits. We are going to look at each traits in detail, but they can also be imported a la carte, dim sum style. Back to the full course.

cats.instances.AllInstances

Thus far, I have been intentionally conflating the concept of typeclass instances and method injection (aka enrich my library). But the fact that (Int, +) forms a Monoid and that Monoid introduces |+| operator are two different things.

One of the interesting design of Cats is that it rigorously separates the two concepts into “instance” and “syntax.” Even if it makes logical sense to some users, the choice of symbolic operators can often be a point of contention with any libraries. Libraries and tools such as sbt, dispatch, and specs introduce its own DSL, and their effectiveness have been hotly debated.

AllInstances is a trait that mixes in all the typeclass instances for built-in datatypes such as Either[A, B] and Option[A].

package cats

package instances

trait AllInstances

extends FunctionInstances

with StringInstances

with EitherInstances

with ListInstances

with OptionInstances

with SetInstances

with StreamInstances

with VectorInstances

with AnyValInstances

with MapInstances

with BigIntInstances

with BigDecimalInstances

with FutureInstances

with TryInstances

with TupleInstances

with UUIDInstances

with SymbolInstances

cats.syntax.AllSyntax

AllSyntax is a trait that mixes in all of the operators available in Cats.

package cats

package syntax

trait AllSyntax

extends ApplicativeSyntax

with ApplicativeErrorSyntax

with ApplySyntax

with BifunctorSyntax

with BifoldableSyntax

with BitraverseSyntax

with CartesianSyntax

with CoflatMapSyntax

with ComonadSyntax

with ComposeSyntax

with ContravariantSyntax

with CoproductSyntax

with EitherSyntax

with EqSyntax

....

a la carte style

Or, I’d like to call dim sum style, where they bring in a cart load of chinese dishes and you pick what you want.

If for whatever reason if you do not wish to import the entire cats.implicits._, you can pick and choose.

typeclass instances

As I mentioned above, after Cats 2.2.0, you typically don’t have to do anything to get the typeclass instances.

cats.Monad[Option].pure(0)

// res0: Option[Int] = Some(value = 0)

If you want to import typeclass instances for Option for some reason:

{

import cats.instances.option._

cats.Monad[Option].pure(0)

}

// res1: Option[Int] = Some(value = 0)

If you just want all instances, here’s how to load them all:

{

import cats.instances.all._

cats.Monoid[Int].empty

}

// res2: Int = 0

Because we have not injected any operators, you would have to work more with helper functions and functions under typeclass instances, which could be exactly what you want.

Cats typeclass syntax

Typeclass syntax are broken down by the typeclass. Here’s how to get injected methods and operators for Eqs:

{

import cats.syntax.eq._

1 === 1

}

// res3: Boolean = true

Cats datatype syntax

Cats datatype syntax like Writer are also available under cats.syntax package:

{

import cats.syntax.writer._

1.tell

}

// res4: cats.data.package.Writer[Int, Unit] = WriterT(run = (1, ()))

standard datatype syntax

Standard datatype syntax are broken down by the datatypes. Here’s how to get injected methods and functions for Option:

{

import cats.syntax.option._

1.some

}

// res5: Option[Int] = Some(value = 1)

all syntax

Here’s how to load all syntax and all instances.

{

import cats.syntax.all._

import cats.instances.all._

1.some

}

// res6: Option[Int] = Some(value = 1)

This is the same as importing cats.implicits._.

Again, if you are at all confused by this, just stick with the following first:

scala> import cats._, cats.data._, cats.syntax.all._

day 3

Yesterday we started with defining our own typeclasses using simulacrum, and ended with checking for functor laws using Discipline.

Kinds and some type-foo

Learn You a Haskell For Great Good says:

Types are little labels that values carry so that we can reason about the values. But types have their own little labels, called kinds. A kind is more or less the type of a type. … What are kinds and what are they good for? Well, let’s examine the kind of a type by using the :k command in GHCI.

Scala 2.10 didn’t have a :k command, so I wrote kind.scala.

Thanks to George Leontiev (@folone) and others, :kind command is now part of Scala 2.11 (scala/scala#2340). Let’s try using it:

scala> :k Int

scala.Int's kind is A

scala> :k -v Int

scala.Int's kind is A

*

This is a proper type.

Int and every other types that you can make a value out of are called a proper type and denoted with a * symbol (read “type”). This is analogous to the value 1 at value-level. Using Scala’s type variable notation this could be written as A.

scala> :k -v Option

scala.Option's kind is F[+A]

* -(+)-> *

This is a type constructor: a 1st-order-kinded type.

scala> :k -v Either

scala.util.Either's kind is F[+A1,+A2]

* -(+)-> * -(+)-> *

This is a type constructor: a 1st-order-kinded type.

These are normally called type constructors. Another way of looking at it is that it’s one step removed from a proper type. So we can call it a first-order-kinded type. This is analogous to a first-order value (_: Int) + 3, which we would normally call a function at the value level.

The curried notation uses arrows like * -> * and * -> * -> *. Note, Option[Int] is *; Option is * -> *. Using Scala’s type variable notation they could be written as F[+A] and F[+A1,+A2].

scala> :k -v Eq

algebra.Eq's kind is F[A]

* -> *

This is a type constructor: a 1st-order-kinded type.

Scala encodes (or complects) the notion of typeclasses using type constructors.

When looking at this, think of it as Eq is a typeclass for A, a proper type.

This should make sense because you would pass Int into Eq.

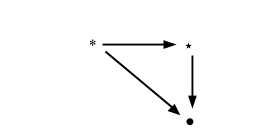

scala> :k -v Functor

cats.Functor's kind is X[F[A]]

(* -> *) -> *

This is a type constructor that takes type constructor(s): a higher-kinded type.

Again, Scala encodes typeclasses using type constructors,

so when looking at this, think of it as Functor is a typeclass for F[A], a type constructor.

This should also make sense because you would pass List into Functor.

In other words, this is a type constructor that accepts another type constructor.

This is analogous to a higher-order function, and thus called higher-kinded type.

These are denoted as (* -> *) -> *. Using Scala’s type variable notation this could be written as X[F[A]].

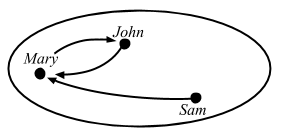

forms-a vs is-a

The terminology around typeclasses tends to get jumbled up.

For example, the pair (Int, +) forms a typeclass called monoid.

Colloquially, we say things like “is X a monoid?” to mean “can X form a monoid under some operation?”

An example of this is Either[A, B], which we implied “is-a” functor yesterday.

This is not completely accurate because, even though it might not be useful, we could have defined another left-biased functor.

Semigroupal

Functors, Applicative Functors and Monoids:

So far, when we were mapping functions over functors, we usually mapped functions that take only one parameter. But what happens when we map a function like

*, which takes two parameters, over a functor?

import cats._

{

val hs = Functor[List].map(List(1, 2, 3, 4)) ({(_: Int) * (_:Int)}.curried)

Functor[List].map(hs) {_(9)}

}

// res0: List[Int] = List(9, 18, 27, 36)

LYAHFGG:

But what if we have a functor value of

Just (3 *)and a functor value ofJust 5, and we want to take out the function fromJust(3 *)and map it overJust 5?Meet the

Applicativetypeclass. It lies in theControl.Applicativemodule and it defines two methods,pureand<*>.

Cats splits this into Semigroupal, Apply, and Applicative. Here’s the contract for Cartesian:

/**

* [[Semigroupal]] captures the idea of composing independent effectful values.

* It is of particular interest when taken together with [[Functor]] - where [[Functor]]

* captures the idea of applying a unary pure function to an effectful value,

* calling `product` with `map` allows one to apply a function of arbitrary arity to multiple

* independent effectful values.

*

* That same idea is also manifested in the form of [[Apply]], and indeed [[Apply]] extends both

* [[Semigroupal]] and [[Functor]] to illustrate this.

*/

@typeclass trait Semigroupal[F[_]] {

def product[A, B](fa: F[A], fb: F[B]): F[(A, B)]

}

Semigroupal defines product function, which produces a pair of (A, B) wrapped in effect F[_] out of F[A] and F[B].

Cartesian law

Cartesian has a single law called associativity:

trait CartesianLaws[F[_]] {

implicit def F: Cartesian[F]

def cartesianAssociativity[A, B, C](fa: F[A], fb: F[B], fc: F[C]): (F[(A, (B, C))], F[((A, B), C)]) =

(F.product(fa, F.product(fb, fc)), F.product(F.product(fa, fb), fc))

}

Apply

Functors, Applicative Functors and Monoids:

So far, when we were mapping functions over functors, we usually mapped functions that take only one parameter. But what happens when we map a function like

*, which takes two parameters, over a functor?

import cats._, cats.syntax.all._

{

val hs = Functor[List].map(List(1, 2, 3, 4)) ({(_: Int) * (_:Int)}.curried)

Functor[List].map(hs) {_(9)}

}

// res0: List[Int] = List(9, 18, 27, 36)

LYAHFGG:

But what if we have a functor value of

Just (3 *)and a functor value ofJust 5, and we want to take out the function fromJust(3 *)and map it overJust 5?Meet the

Applicativetypeclass. It lies in theControl.Applicativemodule and it defines two methods,pureand<*>.

Cats splits Applicative into Cartesian, Apply, and Applicative. Here’s the contract for Apply:

/**

* Weaker version of Applicative[F]; has apply but not pure.

*

* Must obey the laws defined in cats.laws.ApplyLaws.

*/

@typeclass(excludeParents = List("ApplyArityFunctions"))

trait Apply[F[_]] extends Functor[F] with Cartesian[F] with ApplyArityFunctions[F] { self =>

/**

* Given a value and a function in the Apply context, applies the

* function to the value.

*/

def ap[A, B](ff: F[A => B])(fa: F[A]): F[B]

....

}

Note that Apply extends Functor, Cartesian, and ApplyArityFunctions.

The <*> function is called ap in Cats’ Apply. (This was originally called apply, but was renamed to ap. +1)

LYAHFGG:

You can think of

<*>as a sort of a beefed-upfmap. Whereasfmaptakes a function and a functor and applies the function inside the functor value,<*>takes a functor that has a function in it and another functor and extracts that function from the first functor and then maps it over the second one.

The Applicative Style

LYAHFGG:

With the

Applicativetype class, we can chain the use of the<*>function, thus enabling us to seamlessly operate on several applicative values instead of just one.

Here’s an example in Haskell:

ghci> pure (-) <*> Just 3 <*> Just 5

Just (-2)

Cats comes with the apply syntax.

(3.some, 5.some) mapN { _ - _ }

// res1: Option[Int] = Some(value = -2)

(none[Int], 5.some) mapN { _ - _ }

// res2: Option[Int] = None

(3.some, none[Int]) mapN { _ - _ }

// res3: Option[Int] = None

This shows that Option forms Cartesian.

List as a Apply

LYAHFGG:

Lists (actually the list type constructor,

[]) are applicative functors. What a surprise!

Let’s see if we can use the apply sytax:

(List("ha", "heh", "hmm"), List("?", "!", ".")) mapN {_ + _}

// res4: List[String] = List(

// "ha?",

// "ha!",

// "ha.",

// "heh?",

// "heh!",

// "heh.",

// "hmm?",

// "hmm!",

// "hmm."

// )

*> and <* operators

Apply enables two operators, <* and *>, which are special cases of Apply[F].map2。

The definition looks simple enough, but the effect is cool:

1.some <* 2.some

// res5: Option[Int] = Some(value = 1)

none[Int] <* 2.some

// res6: Option[Int] = None

1.some *> 2.some

// res7: Option[Int] = Some(value = 2)

none[Int] *> 2.some

// res8: Option[Int] = None

If either side fails, we get None.

Option syntax

Before we move on, let’s look at the syntax that Cats adds to create an Option value.

9.some

// res9: Option[Int] = Some(value = 9)

none[Int]

// res10: Option[Int] = None

We can write (Some(9): Option[Int]) as 9.some.

Option as an Apply

Here’s how we can use it with Apply[Option].ap:

import cats._, cats.syntax.all._

Apply[Option].ap({{(_: Int) + 3}.some })(9.some)

// res12: Option[Int] = Some(value = 12)

Apply[Option].ap({{(_: Int) + 3}.some })(10.some)

// res13: Option[Int] = Some(value = 13)

Apply[Option].ap({{(_: String) + "hahah"}.some })(none[String])

// res14: Option[String] = None

Apply[Option].ap({ none[String => String] })("woot".some)

// res15: Option[String] = None

If either side fails, we get None.

If you remember Making our own typeclass with simulacrum from yesterday, simulacrum will automatically transpose the function defined on the typeclass contract into an operator, magically.

({(_: Int) + 3}.some) ap 9.some

// res16: Option[Int] = Some(value = 12)

({(_: Int) + 3}.some) ap 10.some

// res17: Option[Int] = Some(value = 13)

({(_: String) + "hahah"}.some) ap none[String]

// res18: Option[String] = None

(none[String => String]) ap "woot".some

// res19: Option[String] = None

Useful functions for Apply

LYAHFGG:

Control.Applicativedefines a function that’s calledliftA2, which has a type of

liftA2 :: (Applicative f) => (a -> b -> c) -> f a -> f b -> f c .

Remember parameters are flipped around in Scala.

What we have is a function that takes F[B] and F[A], then a function (A, B) => C.

This is called map2 on Apply.

@typeclass(excludeParents = List("ApplyArityFunctions"))

trait Apply[F[_]] extends Functor[F] with Cartesian[F] with ApplyArityFunctions[F] { self =>

def ap[A, B](ff: F[A => B])(fa: F[A]): F[B]

def productR[A, B](fa: F[A])(fb: F[B]): F[B] =

map2(fa, fb)((_, b) => b)

def productL[A, B](fa: F[A])(fb: F[B]): F[A] =

map2(fa, fb)((a, _) => a)

override def product[A, B](fa: F[A], fb: F[B]): F[(A, B)] =

ap(map(fa)(a => (b: B) => (a, b)))(fb)

/** Alias for [[ap]]. */

@inline final def <*>[A, B](ff: F[A => B])(fa: F[A]): F[B] =

ap(ff)(fa)

/** Alias for [[productR]]. */

@inline final def *>[A, B](fa: F[A])(fb: F[B]): F[B] =

productR(fa)(fb)

/** Alias for [[productL]]. */

@inline final def <*[A, B](fa: F[A])(fb: F[B]): F[A] =

productL(fa)(fb)

/**

* ap2 is a binary version of ap, defined in terms of ap.

*/

def ap2[A, B, Z](ff: F[(A, B) => Z])(fa: F[A], fb: F[B]): F[Z] =

map(product(fa, product(fb, ff))) { case (a, (b, f)) => f(a, b) }

def map2[A, B, Z](fa: F[A], fb: F[B])(f: (A, B) => Z): F[Z] =

map(product(fa, fb))(f.tupled)

def map2Eval[A, B, Z](fa: F[A], fb: Eval[F[B]])(f: (A, B) => Z): Eval[F[Z]] =

fb.map(fb => map2(fa, fb)(f))

....

}

For binary operators, map2 can be used to hide the applicative style.

Here we can write the same thing in two different ways:

(3.some, List(4).some) mapN { _ :: _ }

// res20: Option[List[Int]] = Some(value = List(3, 4))

Apply[Option].map2(3.some, List(4).some) { _ :: _ }

// res21: Option[List[Int]] = Some(value = List(3, 4))

The results match up.

The 2-parameter version of Apply[F].ap is called Apply[F].ap2:

Apply[Option].ap2({{ (_: Int) :: (_: List[Int]) }.some })(3.some, List(4).some)

// res22: Option[List[Int]] = Some(value = List(3, 4))

There’s a special case of map2 called tuple2, which works like this:

Apply[Option].tuple2(1.some, 2.some)

// res23: Option[(Int, Int)] = Some(value = (1, 2))

Apply[Option].tuple2(1.some, none[Int])

// res24: Option[(Int, Int)] = None

If you are wondering what happens when you have a function with more than two

parameters, note that Apply[F[_]] extends ApplyArityFunctions[F].

This is auto-generated code that defines ap3, map3, tuple3, … up to

ap22, map22, tuple22.

Apply law

Apply has a single law called composition:

trait ApplyLaws[F[_]] extends FunctorLaws[F] {

implicit override def F: Apply[F]

def applyComposition[A, B, C](fa: F[A], fab: F[A => B], fbc: F[B => C]): IsEq[F[C]] = {

val compose: (B => C) => (A => B) => (A => C) = _.compose

fa.ap(fab).ap(fbc) <-> fa.ap(fab.ap(fbc.map(compose)))

}

}

Applicative

Note: If you jumped to this page because you’re interested in applicative functors, you should definitely read Semigroupal and Apply first.

Functors, Applicative Functors and Monoids:

Meet the

Applicativetypeclass. It lies in theControl.Applicativemodule and it defines two methods,pureand<*>.

Let’s see Cats’ Applicative:

@typeclass trait Applicative[F[_]] extends Apply[F] { self =>

/**

* `pure` lifts any value into the Applicative Functor

*

* Applicative[Option].pure(10) = Some(10)

*/

def pure[A](x: A): F[A]

....

}

It’s an extension of Apply with pure.

LYAHFGG:

pureshould take a value of any type and return an applicative value with that value inside it. … A better way of thinking aboutpurewould be to say that it takes a value and puts it in some sort of default (or pure) context—a minimal context that still yields that value.

It seems like it’s basically a constructor that takes value A and returns F[A].

import cats._, cats.syntax.all._

Applicative[List].pure(1)

// res0: List[Int] = List(1)

Applicative[Option].pure(1)

// res1: Option[Int] = Some(value = 1)

This actually comes in handy using Apply[F].ap so we can avoid calling {{...}.some}.

{

val F = Applicative[Option]

F.ap({ F.pure((_: Int) + 3) })(F.pure(9))

}

// res2: Option[Int] = Some(value = 12)

We’ve abstracted Option away from the code.

Useful functions for Applicative

LYAHFGG:

Let’s try implementing a function that takes a list of applicatives and returns an applicative that has a list as its result value. We’ll call it

sequenceA.

sequenceA :: (Applicative f) => [f a] -> f [a]

sequenceA [] = pure []

sequenceA (x:xs) = (:) <$> x <*> sequenceA xs

Let’s try implementing this with Cats!

def sequenceA[F[_]: Applicative, A](list: List[F[A]]): F[List[A]] = list match {

case Nil => Applicative[F].pure(Nil: List[A])

case x :: xs => (x, sequenceA(xs)) mapN {_ :: _}

}

Let’s test it:

sequenceA(List(1.some, 2.some))

// res3: Option[List[Int]] = Some(value = List(1, 2))

sequenceA(List(3.some, none[Int], 1.some))

// res4: Option[List[Int]] = None

sequenceA(List(List(1, 2, 3), List(4, 5, 6)))

// res5: List[List[Int]] = List(

// List(1, 4),

// List(1, 5),

// List(1, 6),

// List(2, 4),

// List(2, 5),

// List(2, 6),

// List(3, 4),

// List(3, 5),

// List(3, 6)

// )

We got the right answers. What’s interesting here is that we did end up needing

Applicative after all, and sequenceA is generic in a typeclassy way.

Using

sequenceAis useful when we have a list of functions and we want to feed the same input to all of them and then view the list of results.

For Function1 with Int fixed example, we need some type annotation:

{

val f = sequenceA[Function1[Int, *], Int](List((_: Int) + 3, (_: Int) + 2, (_: Int) + 1))

f(3)

}

// res6: List[Int] = List(6, 5, 4)

Applicative Laws

Here are the laws for Applicative:

- identity:

pure id <*> v = v - homomorphism:

pure f <*> pure x = pure (f x) - interchange:

u <*> pure y = pure ($ y) <*> u

Cats defines another law

def applicativeMap[A, B](fa: F[A], f: A => B): IsEq[F[B]] =

fa.map(f) <-> fa.ap(F.pure(f))

This seem to say that if you combine F.ap and F.pure, you should get the same effect as F.map.

It took us a while, but I am glad we got this far. We’ll pick it up from here later.

day 4

Yesterday we reviewed kinds and types, explored Apply, applicative style, and ended with sequenceA.

Let’s move on to Semigroup and Monoid today.

Semigroup

If you have the book Learn You a Haskell for Great Good you get to start a new chapter: “Monoids.” For the website, it’s still Functors, Applicative Functors and Monoids.

First, it seems like Cats is missing newtype/tagged type facility.

We’ll implement our own later.

Haskell’s Monoid is split into Semigroup and Monoid in Cats. They are also type aliases of algebra.Semigroup and algebra.Monoid. As with Apply and Applicative, Semigroup is a weaker version of Monoid. If you can solve the same problem, weaker is cooler because you’re making fewer assumptions.

LYAHFGG:

It doesn’t matter if we do

(3 * 4) * 5or3 * (4 * 5). Either way, the result is60. The same goes for++. …We call this property associativity.

*is associative, and so is++, but-, for example, is not.

Let’s check this:

import cats._, cats.syntax.all._

assert { (3 * 2) * (8 * 5) === 3 * (2 * (8 * 5)) }

assert { List("la") ++ (List("di") ++ List("da")) === (List("la") ++ List("di")) ++ List("da") }

No error means, they are equal.

The Semigroup typeclass

Here’s the typeclass contract for algebra.Semigroup.

/**

* A semigroup is any set `A` with an associative operation (`combine`).

*/

trait Semigroup[@sp(Int, Long, Float, Double) A] extends Any with Serializable {

/**

* Associative operation taking which combines two values.

*/

def combine(x: A, y: A): A

....

}

This enables combine operator and its symbolic alias |+|. Let’s try using this.

List(1, 2, 3) |+| List(4, 5, 6)

// res2: List[Int] = List(1, 2, 3, 4, 5, 6)

"one" |+| "two"

// res3: String = "onetwo"

The Semigroup Laws

Associativity is the only law for Semigroup.

- associativity

(x |+| y) |+| z = x |+| (y |+| z)

Here’s how we can check the Semigroup laws from the REPL. Review Checking laws with discipline for the details:

scala> import cats._, cats.data._, cats.implicits._

import cats._

import cats.data._

import cats.implicits._

scala> import cats.kernel.laws.GroupLaws

import cats.kernel.laws.GroupLaws

scala> val rs1 = GroupLaws[Int].semigroup(Semigroup[Int])

rs1: cats.kernel.laws.GroupLaws[Int]#GroupProperties = cats.kernel.laws.GroupLaws$GroupProperties@5a077d1d

scala> rs1.all.check

+ semigroup.associativity: OK, passed 100 tests.

+ semigroup.combineN(a, 1) == a: OK, passed 100 tests.

+ semigroup.combineN(a, 2) == a |+| a: OK, passed 100 tests.

+ semigroup.serializable: OK, proved property.

Lists are Semigroups

List(1, 2, 3) |+| List(4, 5, 6)

// res4: List[Int] = List(1, 2, 3, 4, 5, 6)

Product and Sum

For Int a semigroup can be formed under both + and *.

Instead of tagged types, cats provides only the instance additive.

Trying to use operator syntax here is tricky.

def doSomething[A: Semigroup](a1: A, a2: A): A =

a1 |+| a2

doSomething(3, 5)(Semigroup[Int])

// res5: Int = 8

I might as well stick to function syntax:

Semigroup[Int].combine(3, 5)

// res6: Int = 8

Monoid

LYAHFGG:

It seems that both

*together with1and++along with[]share some common properties:

- The function takes two parameters.

- The parameters and the returned value have the same type.

- There exists such a value that doesn’t change other values when used with the binary function.

Let’s check it out in Scala:

4 * 1

// res0: Int = 4

1 * 9

// res1: Int = 9

List(1, 2, 3) ++ Nil

// res2: List[Int] = List(1, 2, 3)

Nil ++ List(0.5, 2.5)

// res3: List[Double] = List(0.5, 2.5)

Looks right.

Monoid typeclass

Here’s the typeclass contract of algebra.Monoid:

/**

* A monoid is a semigroup with an identity. A monoid is a specialization of a

* semigroup, so its operation must be associative. Additionally,

* `combine(x, empty) == combine(empty, x) == x`. For example, if we have `Monoid[String]`,

* with `combine` as string concatenation, then `empty = ""`.

*/

trait Monoid[@sp(Int, Long, Float, Double) A] extends Any with Semigroup[A] {

/**

* Return the identity element for this monoid.

*/

def empty: A

...

}

Monoid laws

In addition to the semigroup law, monoid must satify two more laws:

- associativity

(x |+| y) |+| z = x |+| (y |+| z) - left identity

Monoid[A].empty |+| x = x - right identity

x |+| Monoid[A].empty = x

Here’s how we can check monoid laws from the REPL:

scala> import cats._, cats.syntax.all._

import cats._

import cats.syntax.all._

scala> import cats.kernel.laws.discipline.MonoidTests

import cats.kernel.laws.discipline.MonoidTests

scala> import org.scalacheck.Test.Parameters

import org.scalacheck.Test.Parameters

scala> val rs1 = MonoidTests[Int].monoid

val rs1: cats.kernel.laws.discipline.MonoidTests[Int]#RuleSet = org.typelevel.discipline.Laws$DefaultRuleSet@108684fb

scala> rs1.all.check(Parameters.default)

+ monoid.associative: OK, passed 100 tests.

+ monoid.collect0: OK, passed 100 tests.

+ monoid.combine all: OK, passed 100 tests.

+ monoid.combineAllOption: OK, passed 100 tests.

+ monoid.intercalateCombineAllOption: OK, passed 100 tests.

+ monoid.intercalateIntercalates: OK, passed 100 tests.

+ monoid.intercalateRepeat1: OK, passed 100 tests.

+ monoid.intercalateRepeat2: OK, passed 100 tests.

+ monoid.is id: OK, passed 100 tests.

+ monoid.left identity: OK, passed 100 tests.

+ monoid.repeat0: OK, passed 100 tests.

+ monoid.repeat1: OK, passed 100 tests.

+ monoid.repeat2: OK, passed 100 tests.

+ monoid.reverseCombineAllOption: OK, passed 100 tests.

+ monoid.reverseRepeat1: OK, passed 100 tests.

+ monoid.reverseRepeat2: OK, passed 100 tests.

+ monoid.reverseReverses: OK, passed 100 tests.

+ monoid.right identity: OK, passed 100 tests.

Here’s the MUnit test of the above:

package example

import cats._

import cats.kernel.laws.discipline.MonoidTests

class IntTest extends munit.DisciplineSuite {

checkAll("Int", MonoidTests[Int].monoid)

}

Value classes

LYAHFGG:

The newtype keyword in Haskell is made exactly for these cases when we want to just take one type and wrap it in something to present it as another type.

Cats does not ship with a tagged-type facility, but Scala now has value classes. This will remain unboxed under certain conditions, so it should work for simple examples.

class Wrapper(val unwrap: Int) extends AnyVal

Disjunction and Conjunction

LYAHFGG:

Another type which can act like a monoid in two distinct but equally valid ways is

Bool. The first way is to have the or function||act as the binary function along withFalseas the identity value. … The other way forBoolto be an instance ofMonoidis to kind of do the opposite: have&&be the binary function and then makeTruethe identity value.

Cats does not provide this, but we can implement it ourselves.

import cats._, cats.syntax.all._

// `class Disjunction(val unwrap: Boolean) extends AnyVal` doesn't work on mdoc

class Disjunction(val unwrap: Boolean)

object Disjunction {

@inline def apply(b: Boolean): Disjunction = new Disjunction(b)

implicit val disjunctionMonoid: Monoid[Disjunction] = new Monoid[Disjunction] {

def combine(a1: Disjunction, a2: Disjunction): Disjunction =

Disjunction(a1.unwrap || a2.unwrap)

def empty: Disjunction = Disjunction(false)

}

implicit val disjunctionEq: Eq[Disjunction] = new Eq[Disjunction] {

def eqv(a1: Disjunction, a2: Disjunction): Boolean =

a1.unwrap == a2.unwrap

}

}

val x1 = Disjunction(true) |+| Disjunction(false)

// x1: Disjunction = repl.MdocSessionDisjunction@564bc78f

x1.unwrap

// res4: Boolean = true

val x2 = Monoid[Disjunction].empty |+| Disjunction(true)

// x2: Disjunction = repl.MdocSessionDisjunction@1b6cb4c9

x2.unwrap

// res5: Boolean = true

Here’s conjunction:

// `class Conjunction(val unwrap: Boolean) extends AnyVal` doesn't work on mdoc

class Conjunction(val unwrap: Boolean)

object Conjunction {

@inline def apply(b: Boolean): Conjunction = new Conjunction(b)

implicit val conjunctionMonoid: Monoid[Conjunction] = new Monoid[Conjunction] {

def combine(a1: Conjunction, a2: Conjunction): Conjunction =

Conjunction(a1.unwrap && a2.unwrap)

def empty: Conjunction = Conjunction(true)

}

implicit val conjunctionEq: Eq[Conjunction] = new Eq[Conjunction] {

def eqv(a1: Conjunction, a2: Conjunction): Boolean =

a1.unwrap == a2.unwrap

}

}

val x3 = Conjunction(true) |+| Conjunction(false)

// x3: Conjunction = repl.MdocSessionConjunction@20ce751

x3.unwrap

// res6: Boolean = false

val x4 = Monoid[Conjunction].empty |+| Conjunction(true)

// x4: Conjunction = repl.MdocSessionConjunction@201bb9f7

x4.unwrap

// res7: Boolean = true

We should check if our custom new types satisfy the the monoid laws.

scala> import cats._, cats.syntax.all._

import cats._

import cats.syntax.all._

scala> import cats.kernel.laws.discipline.MonoidTests

import cats.kernel.laws.discipline.MonoidTests

scala> import org.scalacheck.Test.Parameters

import org.scalacheck.Test.Parameters

scala> import org.scalacheck.{ Arbitrary, Gen }

import org.scalacheck.{Arbitrary, Gen}

scala> implicit def arbDisjunction(implicit ev: Arbitrary[Boolean]): Arbitrary[Disjunction] =

Arbitrary { ev.arbitrary map { Disjunction(_) } }

def arbDisjunction(implicit ev: org.scalacheck.Arbitrary[Boolean]): org.scalacheck.Arbitrary[Disjunction]

scala> val rs1 = MonoidTests[Disjunction].monoid

val rs1: cats.kernel.laws.discipline.MonoidTests[Disjunction]#RuleSet = org.typelevel.discipline.Laws$DefaultRuleSet@464d134

scala> rs1.all.check(Parameters.default)

+ monoid.associative: OK, passed 100 tests.

+ monoid.collect0: OK, passed 100 tests.

+ monoid.combine all: OK, passed 100 tests.

+ monoid.combineAllOption: OK, passed 100 tests.

....

Disjunction looks ok.

scala> implicit def arbConjunction(implicit ev: Arbitrary[Boolean]): Arbitrary[Conjunction] =

Arbitrary { ev.arbitrary map { Conjunction(_) } }

def arbConjunction(implicit ev: org.scalacheck.Arbitrary[Boolean]): org.scalacheck.Arbitrary[Conjunction]

scala> val rs2 = MonoidTests[Conjunction].monoid

val rs2: cats.kernel.laws.discipline.MonoidTests[Conjunction]#RuleSet = org.typelevel.discipline.Laws$DefaultRuleSet@71a4f643

scala> rs2.all.check(Parameters.default)

+ monoid.associative: OK, passed 100 tests.

+ monoid.collect0: OK, passed 100 tests.

+ monoid.combine all: OK, passed 100 tests.

+ monoid.combineAllOption: OK, passed 100 tests.

....

Conjunction looks ok too.

Option as Monoids

LYAHFGG:

One way is to treat

Maybe aas a monoid only if its type parameter a is a monoid as well and then implement mappend in such a way that it uses the mappend operation of the values that are wrapped withJust.

Let’s see if this is how Cats does it.

implicit def optionMonoid[A](implicit ev: Semigroup[A]): Monoid[Option[A]] =

new Monoid[Option[A]] {

def empty: Option[A] = None

def combine(x: Option[A], y: Option[A]): Option[A] =

x match {